Vibrotactile System - Installation

Hardware

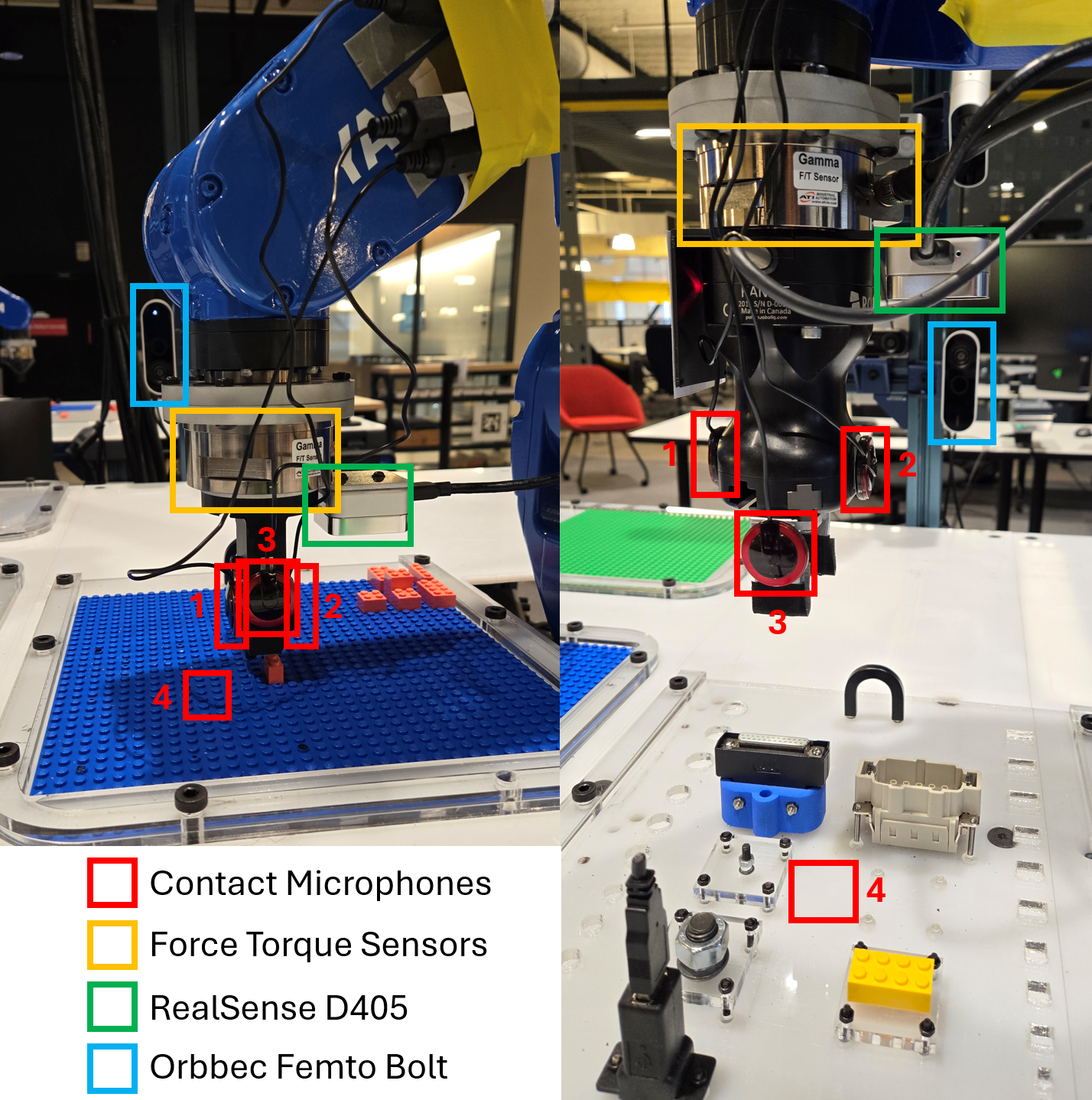

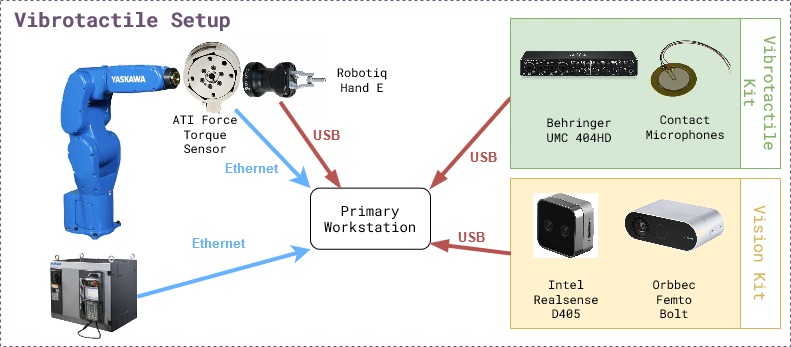

The figures above show the experiment setup. The hardware components are listed below and in the BOM sheet.

Mandatory

| Name | Suggested Order Link | Quantity | Price | Total Cost |
|---|---|---|---|---|
| Behringer UMC 404HD |  | 1 | $109.00 | $109.00 |
| 5 Pack of Piezoelectric Contact Microphones |  | 1 | $14.99 | $14.99 |
| Cable Matters 2-Pack 1/4 Inch Cable 6 Feet |  | 2 | $9.99 | $19.98 |
| 5952 VHB Tape: 2.5cm x 15 ft |  | 1 | $13.99 | $13.99 |
| ATI Gamma Net Type SI-32-2.5 |  | 1 | ~$6000 | ~$6000 |

Optional

| Name | Suggested Order Link | Quantity | Price | Total Cost |
|---|---|---|---|---|
| Intel Realsense D405 |  | 1 | $272.00 | $272.00 |
| Orbbec Femto Bolt |  | 1 | $418.00 | $418.00 |
| Amazon Basics USB-A 3.0 Extension Cable, 3 Meters |  | 2 | $8.54 | $17.08 |
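As a quick cross-check of the cost columns, the sketch below recomputes each row as quantity × unit price and sums each table. It uses only `awk`; the prices are copied from the tables above, and because the ATI sensor price is approximate, the mandatory subtotal is approximate too. `bom_sum` is an illustrative name, not part of the toolbox.

```shell
#!/usr/bin/env bash
# Sum quantity × unit price over BOM rows read from stdin.
# Each input line has the form: <quantity> <unit price in USD>
bom_sum() {
  awk '{ sum += $1 * $2 } END { printf "%.2f\n", sum }'
}

# Mandatory items (the ATI Gamma price of ~$6000 is approximate).
printf '%s\n' "1 109.00" "1 14.99" "2 9.99" "1 13.99" "1 6000" | bom_sum   # prints 6157.96

# Optional items.
printf '%s\n' "1 272.00" "1 418.00" "2 8.54" | bom_sum                     # prints 707.08
```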

Software

There are two levels of software installation required for the vibrotactile system.

Relevant repositories:

motoman_ros1

vibro_tactile_toolbox

1. Device Interfaces

This includes the installation of the software required to interface with the hardware components like robots, cameras, grippers, etc.

ROS nodes for the devices can be launched using the `docker-compose.yml` file in the `docker/` directory. This file will need modification if you use other hardware, such as different cameras.

2. Vibrotactile System

This includes the installation of the software required to run the vibrotactile system, which covers the teach, learn, and execute tasks described in the overview section.

Installation Steps

Step 1: Device Interfaces

Make sure the devices are connected and working properly.

Make sure you connect a monitor to the computer that is connected to the UMC404HD Audio Interface and log into Ubuntu once before following the instructions below.

The hardware prerequisites for the vibrotactile system are one robot arm, 2-4 contact microphones, one force torque sensor, one side camera, and one gripper.

Software prerequisites: Docker, Docker Compose, and the NVIDIA Container Toolkit.
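Before installing, you can quickly confirm that the software prerequisites are on your `PATH`. This is a sketch that assumes Docker Compose v2 (the `docker compose` subcommand used throughout this guide) and an NVIDIA Container Toolkit install that provides the `nvidia-ctk` CLI, as recent versions do; `check_cmd` and `check_prereqs` are illustrative names.

```shell
#!/usr/bin/env bash
# Report whether a single command is available on PATH.
check_cmd() {
  if command -v "$1" >/dev/null 2>&1; then
    echo "ok: $1"
  else
    echo "missing: $1"
    return 1
  fi
}

# Check Docker, the NVIDIA Container Toolkit CLI, and Docker Compose v2.
check_prereqs() {
  local status=0
  check_cmd docker || status=1
  check_cmd nvidia-ctk || status=1
  # Compose v2 is a docker plugin, so test the subcommand itself.
  if docker compose version >/dev/null 2>&1; then
    echo "ok: docker compose"
  else
    echo "missing: docker compose"
    status=1
  fi
  return "$status"
}
```

Run `check_prereqs` in a terminal; a non-zero exit status means at least one tool is missing.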

Make sure the `motoman_ros1` docker is working with a robot namespace.

Make sure the force torque sensor is publishing on the `/<robot namespace>/fts` topic.

First, decide where to install the vibro_tactile_toolbox.

```shell
cd /path/to/desired/folder
git clone https://github.com/cmu-mfi/vibro_tactile_toolbox.git
cd vibro_tactile_toolbox/docker
```

Next, modify `vibro_tactile_toolbox/docker/vibrotactile.env`:

```
ROS_MASTER_URI=http://<your computer ip>:11311
ROS_IP=<your computer ip>
NAMESPACE=<robot namespace>
TYPE=NIST
DATA_DIR=/path/to/desired/data/directory
PROJ_DIR=/path/to/desired/folder/vibro_tactile_toolbox/
ROBOT_IP=<your robot ip>
```
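After filling in the values, you can sanity-check the file. The hypothetical helper below reports any of the seven keys shown above that is missing and any `<...>` placeholder left unfilled. It only inspects the text; it does not test whether the IP addresses are reachable.

```shell
#!/usr/bin/env bash
# Hypothetical helper: check a vibrotactile.env file for the keys listed
# above and for leftover <placeholder> values. Returns non-zero on problems.
check_env_file() {
  local file="$1" status=0 key
  for key in ROS_MASTER_URI ROS_IP NAMESPACE TYPE DATA_DIR PROJ_DIR ROBOT_IP; do
    if ! grep -q "^${key}=" "$file"; then
      echo "missing key: ${key}"
      status=1
    fi
  done
  # Print any line that still contains text like <your robot ip>.
  if grep -n '<[^>]*>' "$file"; then
    echo "unfilled placeholders remain on the lines above"
    status=1
  fi
  return "$status"
}
```

For example: `check_env_file /path/to/desired/folder/vibro_tactile_toolbox/docker/vibrotactile.env`.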

Add the environment variables to your `~/.bashrc` file:

```shell
cd /path/to/desired/folder/vibro_tactile_toolbox/docker
echo "source $(pwd)/vibrotactile.env" >> ~/.bashrc
source ~/.bashrc
```
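Running the `echo ... >> ~/.bashrc` line more than once adds duplicate `source` lines. If you expect to repeat the setup, a guarded append avoids that by skipping the write when the exact line is already present. `append_once` is a hypothetical helper, not part of the toolbox.

```shell
#!/usr/bin/env bash
# Append a line to a file only if that exact line is not already there.
append_once() {
  local line="$1" file="$2"
  touch "$file"
  # -x: match whole line, -F: fixed string, -q: no output
  grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}
```

From the `docker` folder: `append_once "source $(pwd)/vibrotactile.env" ~/.bashrc`, then `source ~/.bashrc`.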

Afterwards, build the docker images and start the containers with the following commands:

```shell
bash build_docker.sh
docker compose up --build -d
```

Note: If you are using different hardware, modify the `docker-compose.yml` file accordingly.

Step 2: Systems Check

Run the systems check test:

```shell
bash $PROJ_DIR/convenience_scripts/systems_check.sh
```

Step 3: Modify/Create Config Files

You can look through and modify the config files in the `config` folder, such as `nist.yaml` and `lego.yaml`. Also, if your end-effector-to-robot transformation is different, you will need to modify `transforms/hande_ee.tf` or `transforms/lego_ee.tf` to account for the differences.

You will need to adjust the side camera view according to how you mounted the camera. To do so, follow these steps:

Open a new terminal and run the following commands:

```shell
xhost +
bash $PROJ_DIR/docker/new_terminal.sh
# Run the next command in the container shell opened above
rosrun vibro_tactile_toolbox determine_camera_parameters.py $NAMESPACE
```

Next, click the Rotate button at the top left of the window named RAW IMAGE until the image is upright. Then click the location of the connector socket that will be used in the data collection, and check the image in the CROPPED IMAGE window. Once you are happy with the view, press ‘q’ on the keyboard. The terminal will print the camera parameters that you need to copy into `launch/orbbec.launch` in the vibro_tactile_toolbox folder; in particular, modify the `rotation`, `x_offset`, and `y_offset` parameters. Then save the file and restart the docker containers:

```shell
cd $PROJ_DIR/docker
docker compose down
docker compose up --build -d
```
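If you re-tune the camera often, the parameter edit can be scripted and run before restarting the containers. This sketch rests on an assumption: that `launch/orbbec.launch` declares each parameter on one line in the form `<param name="rotation" value="..."/>`. Check the actual file first and adapt the pattern if it uses `<arg>` tags or a different layout. `set_launch_param` is a hypothetical helper, and it relies on GNU `sed -i` as found on Ubuntu.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set value="..." on the line declaring name="<param>".
# ASSUMPTION: the launch file uses one-line <param name="..." value="..."/> tags.
set_launch_param() {
  local file="$1" name="$2" value="$3"
  # Capture everything from name="<param>" up to value=", then swap the value.
  sed -i "s|\(name=\"${name}\"[^>]*value=\"\)[^\"]*\"|\1${value}\"|" "$file"
}
```

For example, `set_launch_param "$PROJ_DIR/launch/orbbec.launch" x_offset <printed x_offset>`, repeated for `rotation` and `y_offset` with the values printed by `determine_camera_parameters.py`.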

Step 4: TEACH - Collect training data

NIST Connectors

The first step in teaching a new connector is to place the connector at a consistent and repeatable location, such as a corner. In this tutorial I use an ethernet cable as the example, but you can substitute the word `ethernet` with whichever connector you would like.

Next, open the robot’s gripper if you are using the Robotiq Hand E.

If the vibro_tactile_toolbox_container is already running, you can just create a new terminal using the command:

```shell
bash $PROJ_DIR/docker/new_terminal.sh
```

To open the Robotiq Hand E gripper, you need to run the following command in the docker container.

```shell
rosrun robotiq_mm_ros open_gripper.py $NAMESPACE
```

Then jog the robot so that when it closes its fingers, it picks up the connector cleanly. You can test the position by closing and opening the gripper and checking that the connector does not move from its original position.

```shell
rosrun robotiq_mm_ros close_gripper.py $NAMESPACE
rosrun robotiq_mm_ros open_gripper.py $NAMESPACE
```

After you are satisfied with the robot pose, run the `save_hande_pose` launch file to save the current robot pose:

```shell
roslaunch vibro_tactile_toolbox save_hande_pose.launch namespace:=$NAMESPACE proj_dir:=$PROJ_DIR
```

The resulting saved pose will be located in the `$PROJ_DIR/transforms/` folder in the file `hande_world.tf`. You can get there in a new terminal using the following command:

```shell
cd $PROJ_DIR/transforms
```

Next, create a new folder in `transforms` named after the connector, such as `ethernet`:

```shell
mkdir ethernet
```

Afterwards, move the saved transform into that folder and name it `world_pick.tf`:

```shell
cd $PROJ_DIR/transforms
mv hande_world.tf ethernet/world_pick.tf
```

Now close the robot’s gripper and jog the robot to the designated place pose, where the connector in the Robotiq gripper is fully inserted into the receptacle. Again, run the `save_hande_pose.launch` file inside the docker container:

```shell
rosrun robotiq_mm_ros close_gripper.py $NAMESPACE
# JOG robot to placement pose
roslaunch vibro_tactile_toolbox save_hande_pose.launch namespace:=$NAMESPACE proj_dir:=$PROJ_DIR
```

Then move the new `hande_world.tf` pose file into the `ethernet` folder, this time naming it `world_place.tf`:

```shell
cd $PROJ_DIR/transforms
mv hande_world.tf ethernet/world_place.tf
```
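The `mkdir`/`mv` steps for the pick and place poses can be wrapped in one hypothetical helper that also refuses to overwrite a pose you already saved. It assumes only the file layout described above: `hande_world.tf` is written into the transforms folder and then stored as `<connector>/world_pick.tf` or `<connector>/world_place.tf`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: file the freshly saved hande_world.tf under a
# connector folder as world_pick.tf or world_place.tf.
# Usage: stash_hande_pose <transforms_dir> <connector> <pick|place>
stash_hande_pose() {
  local dir="$1" connector="$2" which="$3" dest
  case "$which" in
    pick|place) ;;
    *) echo "third argument must be 'pick' or 'place'" >&2; return 2 ;;
  esac
  if [ ! -f "$dir/hande_world.tf" ]; then
    echo "no $dir/hande_world.tf found; run save_hande_pose.launch first" >&2
    return 1
  fi
  dest="$dir/$connector/world_${which}.tf"
  # Refuse to overwrite a pose that was already taught for this connector.
  if [ -e "$dest" ]; then
    echo "$dest already exists; move or delete it first" >&2
    return 1
  fi
  mkdir -p "$dir/$connector"
  mv "$dir/hande_world.tf" "$dest"
}
```

For example: `stash_hande_pose "$PROJ_DIR/transforms" ethernet pick` after saving the pick pose, and the same with `place` after saving the place pose.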

Next, take a look at the launch file `collect_nist_audio_data.launch` inside `$PROJ_DIR/launch` and make any desired modifications, such as the number of trials to collect.

Afterwards, restart all the docker containers using the following commands:

```shell
cd $PROJ_DIR/docker
docker compose down
docker compose up --build -d
```

Then once the docker containers have started, run the check again:

```shell
bash $PROJ_DIR/convenience_scripts/systems_check.sh
```
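Before starting collection, you may also want to confirm that both taught poses exist for the connector. A minimal check based on the folder layout described above; `check_connector_poses` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical pre-flight check: confirm world_pick.tf and world_place.tf
# exist for a connector. Usage: check_connector_poses <transforms_dir> <connector>
check_connector_poses() {
  local dir="$1" connector="$2" status=0 pose
  for pose in world_pick.tf world_place.tf; do
    if [ -f "$dir/$connector/$pose" ]; then
      echo "found: $connector/$pose"
    else
      echo "missing: $connector/$pose"
      status=1
    fi
  done
  return "$status"
}
```

For example: `check_connector_poses "$PROJ_DIR/transforms" ethernet`.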

Finally, to start the data collection, run:

```shell
bash $PROJ_DIR/docker/new_terminal.sh
# OPEN THE GRIPPER
rosrun robotiq_mm_ros open_gripper.py $NAMESPACE
# MOVE THE CONNECTOR TO ITS RESET POSE
roslaunch vibro_tactile_toolbox collect_nist_audio_data.launch namespace:=$NAMESPACE data_dir:=$DATA_DIR proj_dir:=$PROJ_DIR connector_type:=ethernet
```

Keep an eye on the robot and hold onto the connector when the robot releases it.

LEGO

For the lego task, we assume that the lego board is fixed. You will need to register multiple positions within a region of the board.

First, download my trained lego detector model from here, then move it into a `models` folder in vibro_tactile_toolbox:

```shell
cd $PROJ_DIR
mkdir models
mv ~/Downloads/lego_model.pth models/
```

Next, make sure the docker containers are stopped by running `docker compose down` in the `$PROJ_DIR/docker` folder, and edit the `vibrotactile.env` file in the `docker` folder so that it has `TYPE=lego` instead of `TYPE=nist`.

Then remove all of the previously taught locations in the `$PROJ_DIR/transforms/T_lego_world/` folder using the following command:

```shell
rm -rf $PROJ_DIR/transforms/T_lego_world/*
```
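The `TYPE` edit can also be done from the command line. The hypothetical helper below rewrites the existing `TYPE=` line whatever its current value (the template earlier in this guide uses `TYPE=NIST`), or appends one if none exists. It uses GNU `sed -i`, as found on Ubuntu. Because `~/.bashrc` sources this file, open a new terminal or run `source ~/.bashrc` afterwards so your shell sees the new value.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set TYPE=<value> in an env file, replacing any
# existing TYPE= line or appending one if it is missing.
set_env_type() {
  local file="$1" type="$2"
  if grep -q '^TYPE=' "$file"; then
    sed -i "s|^TYPE=.*|TYPE=${type}|" "$file"
  else
    printf 'TYPE=%s\n' "$type" >> "$file"
  fi
}
```

For example: `set_env_type "$PROJ_DIR/docker/vibrotactile.env" lego`.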

Then start the docker containers again:

```shell
cd $PROJ_DIR/docker
docker compose up --build -d
```

To register a lego pose, use the teach pendant to jog the robot so that it is pushing a 2x1 lego block down onto the lego board. Determine the peg’s x,y location using the top-left peg of the board as (0,0), with x decreasing toward the robot and y increasing from left to right. Use the following commands to save the lego pose:

```shell
bash $PROJ_DIR/docker/new_terminal.sh
roslaunch vibro_tactile_toolbox save_lego_pose.launch namespace:=$NAMESPACE proj_dir:=$PROJ_DIR x:=<current x position> y:=<current y position>
```

The lego pose will then be saved in the `$PROJ_DIR/transforms/T_lego_world/` folder with the file name `lego_world_<current x position>_<current y position>.tf`.

Next, take a look at the launch file `collect_lego_audio_data.launch` inside `$PROJ_DIR/launch` and make any desired modifications, such as the number of trials to collect.

Afterwards, it is very important to restart the docker containers using the following commands:

```shell
cd $PROJ_DIR/docker
docker compose down
docker compose up --build -d
```

Then once the docker containers have started, run the check again:

```shell
bash $PROJ_DIR/convenience_scripts/systems_check.sh
```
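Before starting the lego collection, you can list which board positions have a saved pose. This sketch assumes the `lego_world_<x>_<y>.tf` naming described above, with each coordinate written as a plain integer. Since x decreases toward the robot from the top-left (0,0) peg, x can be negative (assumed to appear as, for example, `lego_world_-2_3.tf`), so the parser splits at the first `_` after the prefix. `list_lego_poses` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the (x, y) positions that have a saved
# lego_world_<x>_<y>.tf file in the given folder.
list_lego_poses() {
  local dir="$1" f name xy
  for f in "$dir"/lego_world_*_*.tf; do
    [ -e "$f" ] || continue      # glob matched nothing
    name=$(basename "$f" .tf)    # e.g. lego_world_-2_3
    xy=${name#lego_world_}       # e.g. -2_3
    printf 'x=%s y=%s\n' "${xy%%_*}" "${xy#*_}"
  done
}
```

For example: `list_lego_poses "$PROJ_DIR/transforms/T_lego_world"`.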

Finally, to start the data collection, run:

```shell
bash $PROJ_DIR/docker/new_terminal.sh
roslaunch vibro_tactile_toolbox collect_lego_audio_data.launch namespace:=$NAMESPACE data_dir:=$DATA_DIR proj_dir:=$PROJ_DIR block_type:=<your current block type>
```

Keep an eye on the robot and stop the data collection script if the lego flies off the board. If the lego vision predictions are incorrect, you will need to adjust `top_bbox` and `bot_bbox` under `lego_detector` in `config/lego.yaml`.

Step 5: LEARN - Train the models

If you want sample data, you can download it here and unzip it into your `Documents/vibro_tactile_data` folder.

NIST Connectors

To create the NIST dataset, modify the script `convenience_scripts/make_nist_dataset.sh`. `VOLS` represents the volumes you have collected data at. `CONNECTORS` represents the connectors you have collected data with; if you only collected `ethernet`, you would only have `"ethernet"` in the `()`. `VELS` represents the velocities you have collected data at. `TRAIN_VS_TEST` represents whether you have collected test data yet; if you haven't, it would contain only `"vel_"`. Finally, `ROBOT_NAME` is the robot's namespace. For example, you could modify it to be:

```shell
VOLS=(75)
CONNECTORS=("ethernet")
VELS=(0.01)
TRAIN_VS_TEST=("vel_")
ROBOT_NAME="<robot namespace>"
```
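These variables are bash arrays, whose entries are separated by spaces, not commas. The illustration below shows bash array expansion only; it is not the actual loop in `make_nist_dataset.sh`. It enumerates every (volume, connector, velocity) combination a nested loop over such arrays would visit. The second velocity `0.02` is a hypothetical value added to show how each extra entry multiplies the combinations, and joining the prefix with the velocity (as in `vel_0.01`) is a guess at how `TRAIN_VS_TEST` is used.

```shell
#!/usr/bin/env bash
# Illustration of bash array expansion (not the real make_nist_dataset.sh).
# VELS has a hypothetical second entry, 0.02, to show how entries multiply.
VOLS=(75)
CONNECTORS=("ethernet")
VELS=(0.01 0.02)
TRAIN_VS_TEST=("vel_")

list_combinations() {
  local vol connector prefix vel
  for vol in "${VOLS[@]}"; do
    for connector in "${CONNECTORS[@]}"; do
      for prefix in "${TRAIN_VS_TEST[@]}"; do
        for vel in "${VELS[@]}"; do
          echo "${vol} ${connector} ${prefix}${vel}"
        done
      done
    done
  done
}

list_combinations
# 75 ethernet vel_0.01
# 75 ethernet vel_0.02
```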

LEGO

To create the Lego dataset, modify the script `convenience_scripts/make_lego_dataset.sh`. `VOLS` represents the volumes you have collected data at. `BRICKS` represents the brick types you have collected data with; if you only collected `2x1`, you would only have `"2x1"` in the `()`. `VELS` represents the velocities you have collected data at. `TRAIN_VS_TEST` represents whether you have collected test data yet; if you haven't, it would contain only `"vel_"`. Finally, `ROBOT_NAME` is the robot's namespace. For example, you could modify it to be:

```shell
VOLS=(75)
BRICKS=("2x1")
VELS=(0.01)
TRAIN_VS_TEST=("vel_")
ROBOT_NAME="<robot namespace>"
```

Training the outcome and terminator models

To train the audio outcome and terminator models, modify the scripts `convenience_scripts/train_outcome_and_terminator_models.sh` and `convenience_scripts/test_trained_outcome_and_terminator_models.sh`. `TYPES` represents the type of connector you have collected data with, and `CHANNELS` represents the audio channels you want to use. For example, training on all of the audio channels for ethernet would use:

```shell
TYPES=("ethernet")
CHANNELS=("0,1,2,3")
```

On the other hand, training on all of the audio channels for lego would use:

```shell
TYPES=("lego")
CHANNELS=("0,1,2,3")
```
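A small illustration of the bash syntax used in these settings, independent of what the training scripts do with the values: `CHANNELS=("0,1,2,3")` is an array with a single entry, and that entry is a comma-separated list of four channel indices. How the scripts themselves split the entry is an assumption not shown here; the snippet only demonstrates standard bash parsing.

```shell
#!/usr/bin/env bash
# A bash array entry is one string, so this array holds a single entry
# whose text lists four channel indices separated by commas.
CHANNELS=("0,1,2,3")
echo "entries in CHANNELS: ${#CHANNELS[@]}"          # prints: entries in CHANNELS: 1

# Split that one entry on commas to see the individual channel indices.
IFS=',' read -r -a channel_list <<< "${CHANNELS[0]}"
echo "channels in the entry: ${#channel_list[@]}"     # prints: channels in the entry: 4
echo "first: ${channel_list[0]}, last: ${channel_list[3]}"   # prints: first: 0, last: 3
```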

After you have made the changes to the `convenience_scripts`, run the following again:

```shell
cd $PROJ_DIR/docker
docker compose up --build -d
```

Once the containers have been built, run:

```shell
bash $PROJ_DIR/docker/new_terminal.sh
bash $PROJ_DIR/convenience_scripts/make_nist_dataset.sh
bash $PROJ_DIR/convenience_scripts/train_outcome_and_terminator_models.sh
```

For lego, run `make_lego_dataset.sh` instead of `make_nist_dataset.sh`.

Step 6: EXECUTE - Validate the system

To use already pretrained models, download them here and extract them into the `$PROJ_DIR/models` folder.

Next, take a look at the `test_nist_audio_outcome.launch` and `test_lego_audio_outcome.launch` files and make changes depending on your preferences.

Then start the docker containers using the following commands for ethernet:

```shell
cd $PROJ_DIR/docker
docker compose up --build
```

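Finally, to start the NIST evaluation, run the following in a shell inside the container (for example, one opened from a new terminal with `bash $PROJ_DIR/docker/new_terminal.sh`):

```shell
roslaunch vibro_tactile_toolbox test_nist_audio_outcome.launch namespace:=$NAMESPACE proj_dir:=$PROJ_DIR data_dir:=$DATA_DIR connector_type:=ethernet
```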

For lego you will instead run:

```shell
cd $PROJ_DIR/docker
docker compose up --build
```

and

```shell
roslaunch vibro_tactile_toolbox test_lego_audio_outcome.launch namespace:=$NAMESPACE proj_dir:=$PROJ_DIR data_dir:=$DATA_DIR block_type:=<your current block type>
```