LEARN - Training a model

Method

A trained model is used to estimate the cross-pose between the action and anchor pointclouds. Details of the model can be found in the TAX-Pose Paper.
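Loosely, the cross-pose is the rigid transform (rotation plus translation) that moves the action object into its task-specific pose relative to the anchor object. As we understand the TAX-Pose paper, the final step recovers this transform from predicted per-point correspondences with a weighted SVD (Procrustes) solve. The NumPy sketch below illustrates only that step, assuming you already have action points, their predicted goal locations, and optional per-point weights. The function name `weighted_procrustes` is hypothetical and not taken from the taxpose codebase.

```python
import numpy as np


def weighted_procrustes(src, dst, weights=None):
    """Rigid transform minimising sum_i w_i * ||R @ src_i + t - dst_i||^2.

    src, dst: (N, 3) arrays of corresponding points (e.g. action points and
              their predicted goal locations).
    weights:  optional (N,) non-negative per-point confidences.
    Returns a 4x4 homogeneous transform mapping src onto dst.
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    w = np.ones(len(src)) if weights is None else np.asarray(weights, dtype=float)
    w = w / w.sum()

    # Weighted centroids of both point sets.
    src_mean = w @ src
    dst_mean = w @ dst

    # Weighted cross-covariance of the centred point sets (3x3).
    H = (w[:, None] * (src - src_mean)).T @ (dst - dst_mean)

    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so R is a proper rotation (det = +1).
    d = 1.0 if np.linalg.det(Vt.T @ U.T) >= 0 else -1.0
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = dst_mean - R @ src_mean

    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = t
    return T


if __name__ == "__main__":
    # Synthetic check: rotate an "action" cloud 30 degrees about z and shift it.
    rng = np.random.default_rng(0)
    action = rng.normal(size=(200, 3))
    c, s = np.cos(np.pi / 6), np.sin(np.pi / 6)
    R_true = np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])
    t_true = np.array([0.1, -0.2, 0.3])
    goal = action @ R_true.T + t_true
    T = weighted_procrustes(action, goal)
    print(np.allclose(T[:3, :3], R_true), np.allclose(T[:3, 3], t_true))
```

Points with zero weight drop out of both the centroids and the covariance, which is how low-confidence correspondences can be suppressed without changing the solver.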

Code Overview

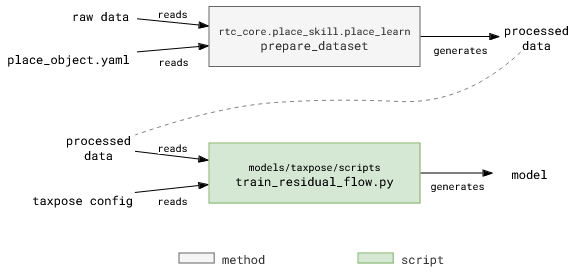

Training a model involves two steps, shown in the image above: preparing the dataset and training the model.

Configuration Files

Prepare Dataset:

This step uses the same configuration file as data collection.

Key parameters:

- `devices.cameras.<camera name>.setup`: Camera calibrations are needed to transform the action and anchor object pointclouds correctly.
- `training.action.object_bounds`, `training.anchor.object_bounds`: The bounding box used to crop each pointcloud to the object of interest.
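Concretely, a camera calibration provides a transform from the camera frame into a shared frame, and each `object_bounds` box then keeps only the points belonging to one object. The NumPy sketch below shows both steps under stated assumptions: the calibration is a 4x4 camera-to-world matrix, and the bounds are an axis-aligned box written as `[[x_min, y_min, z_min], [x_max, y_max, z_max]]` in the world frame. The real `object_bounds` format in the configuration file may differ, and all function names here are hypothetical.

```python
import numpy as np


def transform_points(points, T_world_from_cam):
    """Apply a 4x4 homogeneous transform to an (N, 3) array of points."""
    points = np.asarray(points, dtype=float)
    T = np.asarray(T_world_from_cam, dtype=float)
    return points @ T[:3, :3].T + T[:3, 3]


def crop_to_bounds(points, bounds):
    """Keep only the points inside an axis-aligned box.

    bounds: [[x_min, y_min, z_min], [x_max, y_max, z_max]] (assumed format).
    """
    lower, upper = np.asarray(bounds, dtype=float)
    inside = np.all((points >= lower) & (points <= upper), axis=1)
    return points[inside]


def prepare_object_cloud(cam_points, T_world_from_cam, object_bounds):
    """Camera-frame points -> shared world frame -> cropped to one object."""
    world_points = transform_points(cam_points, T_world_from_cam)
    return crop_to_bounds(world_points, object_bounds)


if __name__ == "__main__":
    # Hypothetical calibration: camera origin sits 1 m above the world origin.
    T = np.eye(4)
    T[:3, 3] = [0.0, 0.0, 1.0]
    cam_points = np.array([[0.0, 0.0, 0.0], [0.1, 0.1, -0.9], [2.0, 0.0, -0.9]])
    bounds = [[-0.5, -0.5, 0.0], [0.5, 0.5, 0.5]]
    print(prepare_object_cloud(cam_points, T, bounds))
```

This also shows why calibration matters: an error in the camera transform shifts every point before cropping, so parts of the object can fall outside the box.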

Train Model:

- The training configuration is defined in the taxpose repo.
- If you define a new task and/or a new setup, each folder in the `config` directory needs a corresponding object/task/scene definition.
- `train_residual_flow.py` uses hydra to resolve the configuration files.
- The primary configuration file is in the `configs/commands` directory of the taxpose repo.

Key parameters:

- `dataset_root`: Path to the prepared train/test dataset.
- `min_num_points`: The action and anchor pointclouds are downsampled to this number of points. More points improve accuracy but increase training time, so keep this number high if the connectors have intricate features; otherwise, a lower number suffices. If you get an error about a pointcloud having fewer points than the minimum, reduce this number.
- `batch_size`: Number of samples in each batch. A larger batch increases training time and memory requirements but improves accuracy. A batch size of 8 with `min_num_points` set to 2048 required 20 GB of GPU memory, so reduce the batch size if you have a smaller GPU.
- `max_epochs`: Number of epochs to train for. The model usually converges within 1000 epochs, so 4000 epochs are more than enough; more epochs mean longer training.
- `wandb.entity`: Your wandb username. If you don't want to use wandb (not recommended), set `WANDB_MODE=disabled` before running the script.
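To illustrate what `min_num_points` controls: every pointcloud must contain at least that many points, and is reduced to exactly that many before training. The sketch below uses farthest point sampling, a common choice for point-cloud networks because it spreads samples over the whole shape and keeps small features better than uniform random sampling. We assume, rather than confirm, that taxpose downsamples this way; the function name and error message are hypothetical.

```python
import numpy as np


def farthest_point_sample(points, num_points, seed=0):
    """Return `num_points` points chosen by farthest point sampling.

    Raises ValueError if the cloud has fewer than `num_points` points,
    which is the failure case that reducing min_num_points avoids.
    """
    points = np.asarray(points, dtype=float)
    n = len(points)
    if num_points <= 0:
        raise ValueError("num_points must be positive")
    if n < num_points:
        raise ValueError(
            f"pointcloud has {n} points, fewer than min_num_points={num_points}"
        )
    rng = np.random.default_rng(seed)
    selected = np.empty(num_points, dtype=int)
    selected[0] = rng.integers(n)  # random starting point
    # Distance from every point to its nearest already-selected point.
    min_dist = np.linalg.norm(points - points[selected[0]], axis=1)
    for i in range(1, num_points):
        selected[i] = int(np.argmax(min_dist))  # farthest from the current set
        new_dist = np.linalg.norm(points - points[selected[i]], axis=1)
        min_dist = np.minimum(min_dist, new_dist)
    return points[selected]


if __name__ == "__main__":
    cloud = np.random.default_rng(0).uniform(size=(5000, 3))
    sampled = farthest_point_sample(cloud, 2048)
    print(sampled.shape)
```

Each iteration scans the whole cloud, so cost grows with both the cloud size and `num_points`; this is one reason a larger `min_num_points` makes data handling and training slower.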

Note: We want to integrate the training routine into the `rtc_core.place_skill.place_learn.learn` method. This would let users train the model with the same script and configure all training parameters in a single configuration file. Contributions are welcome.

Try it out

…todo…